On 5 November 2025, the Central Institute for Occupational and Maritime Medicine (ZfAM) in Hamburg hosted a key workshop marking the next development phase of the AI-healthy ship project. Following the successful first onboard assessment, the focus shifted to the project’s core: designing the machine-learning system that will become the predictive AI engine of the initiative.

The workshop brought together the ZfAM research team and the Lionizers development team, which is responsible for major parts of the technical implementation. Convis Consult & Marketing GmbH moderated the session.

From data points to a learning system

The goal was to define the architecture of a predictive AI that can identify individual patterns from sensor, app, and contextual data—and derive personalized recommendations to support seafarers’ wellbeing onboard. Across several interactive work blocks, participants aligned current findings, key technical parameters, and ethical requirements.

Key outcomes from the three workshop blocks

Block 1 – Review and insights from the first onboard assessment (ZfAM)

ZfAM presented major learnings from the first sea trial. The SeaWell app was successfully installed and used on 17 of 20 available devices. Data collection included both sensor data (e.g., noise, movement, sleep duration) and self-reported wellbeing assessments.

The team concluded that technical stability is largely in place, while there is still room to improve user guidance and motivation. Differences in digital affinity within the crew, as well as cultural expectations such as the desire for more personalized content, were found to influence app usage. A key takeaway: future deployments must make installation and setup significantly easier, so the system can run reliably without an accompanying support team. These first datasets form the basis for designing the predictive evaluation and structuring the ML model.

Block 2 – Technical architecture, parameter evaluation, and ML model approach (Lionizers)

This block focused on methodology and implementation. The group reviewed all available parameters, from sensors and wearables to in-app surveys, to identify the variables most relevant for the AI-healthy ship ML model and to estimate the minimum dataset size required for valid training.

A preliminary conclusion: around 10,000 to 15,000 complete event datasets (combining sensor, app, and contextual data) are needed to produce robust predictive insights.

Different architecture options were discussed, ranging from rule-based approaches to fully data-driven methods. The group agreed to develop the ML model using structured training data generated through a rule-based “mini-model”.
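To illustrate how a rule-based “mini-model” can generate structured training data, here is a minimal Python sketch. The thresholds, field names, and state labels are hypothetical assumptions chosen for illustration; the workshop did not publish the project’s actual rules.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the project's actual rules are not published.
NOISE_DB_LIMIT = 85.0      # ambient noise above this counts as "loud"
MIN_SLEEP_HOURS = 6.0      # sleep below this counts as "short"
LOW_WELLBEING_SCORE = 2    # on an assumed 1-5 self-report scale


@dataclass
class Observation:
    noise_db: float        # sensor: ambient noise level in dB
    sleep_hours: float     # sensor/wearable: sleep duration
    wellbeing_score: int   # app: self-reported wellbeing


def mini_model_label(obs: Observation) -> str:
    """Apply simple if-then rules and return a provisional state label."""
    short_sleep = obs.sleep_hours < MIN_SLEEP_HOURS
    loud = obs.noise_db > NOISE_DB_LIMIT
    low_mood = obs.wellbeing_score <= LOW_WELLBEING_SCORE
    if short_sleep and (loud or low_mood):
        return "possible_fatigue"
    if short_sleep or loud:
        return "watch"
    return "normal"


def build_training_rows(observations):
    """Turn observations into (features, label) pairs for later ML training."""
    return [
        ((o.noise_db, o.sleep_hours, o.wellbeing_score), mini_model_label(o))
        for o in observations
    ]
```

Because every label traces back to an explicit rule, data generated this way stays easy to inspect before any learned model is trained on it.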

A central result was the planned Human-in-the-Loop (HiTL) approach: the crew evaluates proposed interventions, while scientists analyze and validate the feedback and continuously refine the model throughout the project.
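A minimal sketch of how crew ratings could be aggregated for scientific review in such a Human-in-the-Loop cycle. The 1 to 5 rating scale, the thresholds, and the function names are assumptions; the idea shown is that poorly rated or rarely rated interventions are routed to researchers instead of flowing into the model automatically.

```python
from collections import defaultdict
from statistics import mean


def summarize_feedback(ratings):
    """Group crew ratings by intervention.

    ratings: iterable of (intervention_id, score) pairs, score on an assumed 1-5 scale.
    Returns {intervention_id: (mean_score, number_of_ratings)}.
    """
    by_intervention = defaultdict(list)
    for intervention_id, score in ratings:
        by_intervention[intervention_id].append(score)
    return {k: (mean(v), len(v)) for k, v in by_intervention.items()}


def flag_for_review(summary, min_mean=3.0, min_count=5):
    """List interventions researchers should examine before the next training cycle.

    Flags items the crew rated poorly or that have too few ratings to trust.
    """
    return sorted(
        k for k, (avg, n) in summary.items() if avg < min_mean or n < min_count
    )
```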

Block 3 – Ethics, transparency, and explainability (ZfAM)

The final block consolidated requirements for data protection, consent, transparency, and explainability for AI-supported systems and adapted them to the project context. Priorities include audit-proof documentation of consent, the use of Explainable AI (XAI) to make recommendations understandable, and a clear separation between automated suggestions and human judgement. Transparency is considered both a regulatory requirement and a key factor for crew trust and sustained adoption.
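One common way to make consent records tamper-evident is to chain each entry to the previous one with a cryptographic hash. The sketch below assumes this technique; the project has not said how its audit-proof documentation will be implemented, and all names and fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry (without its own hash)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_consent(log: list, crew_id: str, scope: str, granted: bool,
                   timestamp: Optional[str] = None) -> dict:
    """Append a consent decision that is chained to the previous entry."""
    body = {
        "crew_id": crew_id,   # assumed to be a pseudonymous ID
        "scope": scope,       # e.g. "sensor_data" (hypothetical scope name)
        "granted": granted,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _entry_hash(body)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Return False if any stored entry was altered or the chain order is broken."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _entry_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

A hash chain only reveals changes; it does not prevent them. Detecting a deleted final entry would additionally require storing or signing the latest hash somewhere outside the log.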

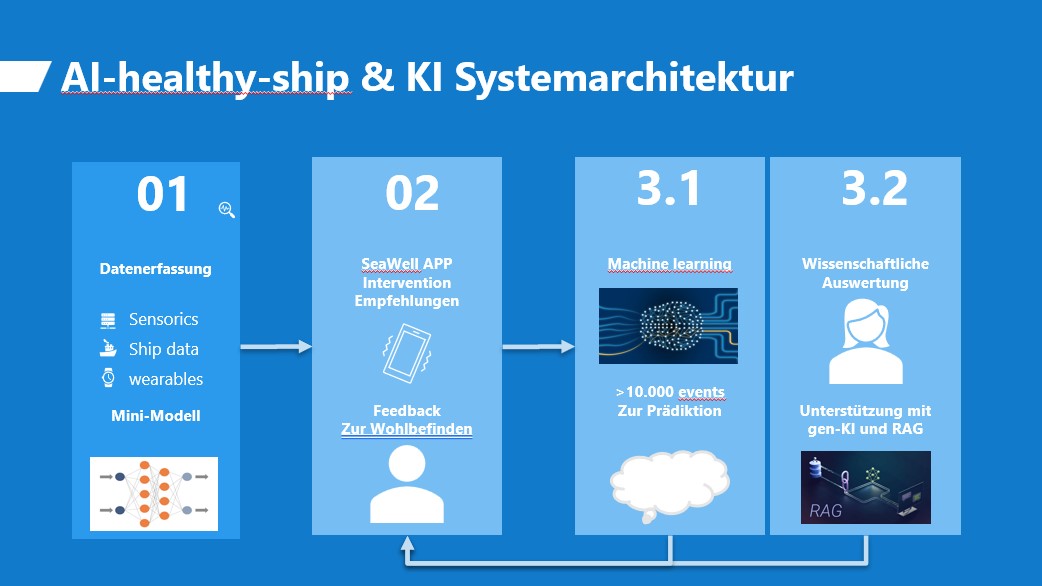

Predictive AI concept: four connected modules

The workshop results were merged into a unified system concept describing a continuous learning loop:

- Data collection & mini-model: Sensors, wearables, and ship data continuously capture information on noise, movement, sleep, and environment. A rule-based mini-model tests initial relationships and lays the groundwork for later ML training.

- SeaWell app & crew feedback: The crew receives personalized health recommendations and rates their usefulness, motivation, and perceived impact on wellbeing. This feedback is collected in real time and fed back as training signals.

- Machine learning: The system links defined “events” (structured units combining sensor, app, and contextual data) to detect patterns that may indicate states such as fatigue. The model gradually learns which factors correlate with specific states and which measures are likely to help in which context.

- Science & AI training cycle: Researchers interpret model outputs, validate findings, and identify new influencing factors. Insights flow into the next training cycle, creating an iterative loop between practice, AI, and research.
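The “event” that connects these modules can be pictured as a simple record with one slot per data source. The following sketch uses hypothetical field names and treats an event as complete only when sensor, app, and contextual data are all present; it then measures progress against 10,000 events, the lower end of the preliminary estimate from Block 2.

```python
from dataclasses import dataclass, field

TARGET_MIN_EVENTS = 10_000  # lower end of the workshop's preliminary estimate


@dataclass
class Event:
    """One structured learning unit; all field names are hypothetical."""
    crew_id: str                                 # pseudonymous crew identifier
    start: str                                   # ISO 8601 start of the event window
    sensor: dict = field(default_factory=dict)   # e.g. {"noise_db": 72.0, "sleep_h": 6.1}
    app: dict = field(default_factory=dict)      # e.g. {"wellbeing": 3, "rating": 4}
    context: dict = field(default_factory=dict)  # e.g. {"watch": "night"}

    def is_complete(self) -> bool:
        """Count toward training only if all three sources contributed data."""
        return bool(self.sensor) and bool(self.app) and bool(self.context)


def training_readiness(events):
    """Return (number of complete events, fraction of the minimum target reached)."""
    complete = sum(1 for e in events if e.is_complete())
    return complete, complete / TARGET_MIN_EVENTS
```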

Next steps

After this intensive workshop, the consortium is preparing the second onboard assessment. The focus is on transferring the latest insights into reliable field operation:

- Improve installation and data synchronization so the app and sensor system can run onboard without a support team

- Further develop the mini-model to enable first predictive tests—allowing the AI to begin learning from crew data

- Build new export and analysis functions to ensure consistent data formats across sources—crucial for efficient and reliable ML training later on
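Consistent data formats largely come down to mapping each source’s field names and units onto one shared schema. A minimal sketch, assuming two hypothetical sources: a wearable that reports sleep in minutes with Unix timestamps, and the app that reports hours with ISO 8601 timestamps. The source names, fields, and schema are illustrative, not the project’s actual export format.

```python
from datetime import datetime, timezone


def _from_unix(seconds):
    """Convert a Unix timestamp to an ISO 8601 string in UTC."""
    return datetime.fromtimestamp(seconds, tz=timezone.utc).isoformat()


def _from_iso(text):
    """Normalize an ISO 8601 string to UTC.

    Assumes the string carries a UTC offset; naive strings would be read as local time.
    """
    return datetime.fromisoformat(text).astimezone(timezone.utc).isoformat()


# Hypothetical per-source mappings: source field -> (common field, converter)
FIELD_MAP = {
    "wearable": {
        "ts": ("timestamp", _from_unix),
        "sleep_min": ("sleep_hours", lambda v: v / 60),
    },
    "app": {
        "time": ("timestamp", _from_iso),
        "sleep": ("sleep_hours", float),
    },
}
COMMON_FIELDS = ("source", "timestamp", "sleep_hours")


def normalize(source, record):
    """Map one raw record onto the shared export schema; unknown fields are dropped."""
    row = dict.fromkeys(COMMON_FIELDS)  # every common field starts as None
    row["source"] = source
    for key, value in record.items():
        if key in FIELD_MAP[source]:
            target, convert = FIELD_MAP[source][key]
            row[target] = convert(value)
    return row
```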

Glossary (short)

- Machine Learning (ML): AI methods that learn patterns from data to generate predictions or recommendations.

- Predictive AI: An AI system that anticipates future states or events—here: crew wellbeing onboard.

- Human-in-the-Loop (HiTL): People actively shape AI learning through feedback, evaluation, and validation.

- Event: A structured learning unit combining sensor data, app interactions, and crew feedback.